Common Issues

Services start failing to resolve

If the CloudOps for Kubernetes Jenkins and Nexus DNS hostnames stop resolving, the kube-proxy DaemonSet might be unhealthy.

To check the status of the DaemonSet with kubectl, run:

kubectl --namespace kube-system describe daemonsets kube-proxy

You can also check the status of the kube-proxy DaemonSet in the kube-system namespace through the Kubernetes Dashboard.

- To access the Kubernetes Dashboard, see Accessing Kubernetes Dashboard.
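The kubectl check above can also be scripted. The following is a minimal sketch, not part of CloudOps for Kubernetes: the function names daemonset_is_healthy and check_kube_proxy are hypothetical, and the sketch assumes that a DaemonSet counts as healthy when its ready pod count equals its desired pod count.

```shell
# Succeeds when at least one pod is desired and every desired pod is ready.
# Arguments: desired count, ready count (both integers).
daemonset_is_healthy() {
  [ "$1" -gt 0 ] && [ "$1" -eq "$2" ]
}

# Hypothetical wrapper: reads both counts from the kube-proxy DaemonSet status.
check_kube_proxy() {
  counts="$(kubectl --namespace kube-system get daemonset kube-proxy \
    -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')" || return 1
  # Left unquoted on purpose so the two numbers become two arguments.
  daemonset_is_healthy $counts
}
```

Running check_kube_proxy against a cluster returns a non-zero exit status when some kube-proxy pods are not ready, which might be a useful first signal before reading the full describe output.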

DaemonSets in the Kubernetes cluster are failing

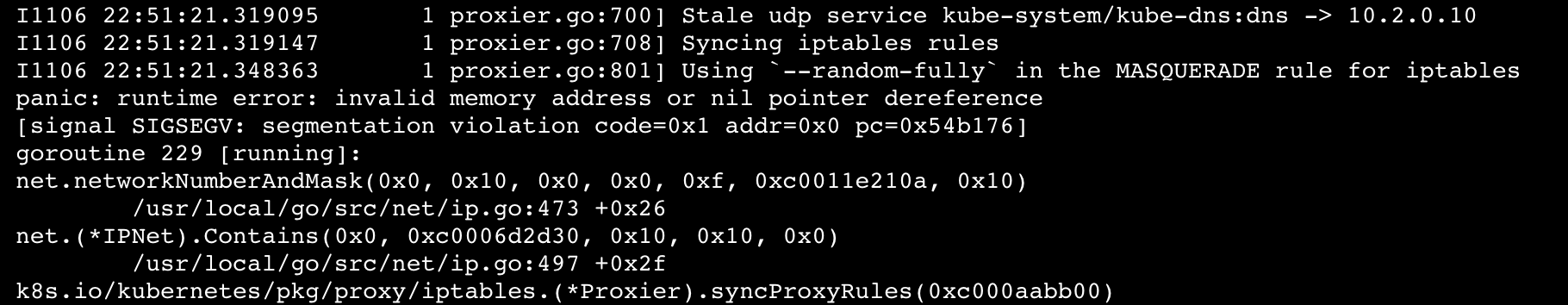

If DaemonSets are failing in the Kubernetes cluster and the logs in the pods have the error:

Check the resource reservation in the kubelet configuration. The failures might occur because the kubelet has run out of memory and started stealing it from the DaemonSets.

To see the kubelet configuration of a node:

Choose a node to inspect. In this example, the name of this node is referred to as NODE_NAME.

In one tab in the command terminal, start the kubectl proxy:

kubectl proxy --port=8001

In a second tab, run the following command to get the configuration from the configuration endpoint:

NODE_NAME="the-name-of-the-node-you-are-inspecting"; curl -sSL "http://localhost:8001/api/v1/nodes/${NODE_NAME}/proxy/configz" | jq '.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"'

note

The resources reserved for the kubelet are in the JSON object kubeReserved.
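To look only at the reserved resources, the configz response can be filtered further. This is a small sketch under the assumption that the response has the shape {"kubeletconfig": {...}}, as the jq filter above implies; the function name kube_reserved is hypothetical.

```shell
# Print only the kubeReserved object from a configz response read on stdin.
# Prints null if the kubelet configuration does not reserve anything.
kube_reserved() {
  jq -c '.kubeletconfig.kubeReserved'
}
```

With the kubectl proxy running, it could be used as: curl -sSL "http://localhost:8001/api/v1/nodes/${NODE_NAME}/proxy/configz" | kube_reserved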

To compare the resources used by the DaemonSets on the node with the resources reserved in the kubelet configuration, run the following command against the same node used in the previous steps:

kubectl describe node ${NODE_NAME}

Running Out of Ephemeral Storage

Pods can run out of ephemeral storage when building large Self Managed Commerce deployment packages, depending on the size of the source code.

When this happens, the logs of the build-deployment-package Jenkins job typically contain a message like the following:

default/jenkins-worker-51db7930-910b-4888-b88e-eeb68b987803-9wjfl-4k9ms Pod just failed (Reason: Evicted, Message: Pod ephemeral local storage usage exceeds the total limit of containers 13322Mi. )
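The limit reported in such a message is a useful starting point when choosing a larger value. As a rough sketch, assuming the message always reports the limit in Mi as in the example above, the number can be pulled out of a job log with sed. The function name eviction_limit_mi is hypothetical.

```shell
# Print the numeric limit (in Mi) from eviction messages read on stdin.
# Lines that do not contain an eviction message produce no output.
eviction_limit_mi() {
  sed -n 's/.*exceeds the total limit of containers \([0-9][0-9]*\)Mi.*/\1/p'
}
```

For the example message, the function prints 13322, which is about 13Gi, so the new limit needs to be higher than that.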

When you experience this error, review the agent configuration YAML file, which is referenced in the Jenkinsfile for the Jenkins job that is failing:

pipeline {
  agent {
    kubernetes {
      yaml kubernetesPodTemplate(file: "maven-5gb-2core-1container.yaml", binding: ['dockerRegistryAddress': dockerRegistryAddress, 'agentImageTag': jenkinsAgentImageTag])
      defaultContainer "docker"
      inheritFrom "jenkins-agent"
    }
  }
  ...
}

The maven-5gb-2core-1container.yaml file, which is located in the cloudops-for-kubernetes Git project under the resources/com/elasticpath directory, defines the agent configuration, including the ephemeral storage resource definitions.

One solution is to copy maven-5gb-2core-1container.yaml to a new file, update the copy to declare more ephemeral storage, and then update the Jenkins job to reference the new file. In the new file you can declare as much ephemeral storage as the job requires. For example, if you need to set the requests and limits at 30Gi:

resources:
  limits:
    memory: "5632Mi"
    cpu: "2"
    ephemeral-storage: "30Gi"

Use Git to commit and push your changes to your CloudOps for Kubernetes repository. You can now use the changes that you made in your repository in the failing Jenkins job. Continue this process until you find the right limits for the job that is failing.
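The copy-and-edit step described above can be sketched as a shell function. This is an illustration only: the function name copy_agent_template and the new file name in the usage note are hypothetical, and the sketch assumes that every ephemeral-storage entry in the template sits on its own line and should receive the same value.

```shell
# Copy an agent pod template and set every ephemeral-storage value in the copy.
# Arguments: source file, destination file, new size (for example 30Gi).
copy_agent_template() {
  # Never overwrite an existing template by accident.
  if [ -e "$2" ]; then
    echo "refusing to overwrite $2" >&2
    return 1
  fi
  # Keep the key and its indentation, replace only the value.
  sed "s/\(ephemeral-storage:[[:space:]]*\).*/\1\"$3\"/" "$1" > "$2"
}
```

From the resources/com/elasticpath directory it might be called as copy_agent_template maven-5gb-2core-1container.yaml maven-5gb-2core-1container-30gi.yaml 30Gi, after which the new file is committed and referenced from the Jenkinsfile.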

Jenkins Will Not Start

If the CloudOps for Kubernetes Jenkins pod will not start, it could be due to incompatible Jenkins plugins. Use the procedure below to force Jenkins to use only the out-of-the-box plugins.

note

This procedure will remove any custom Jenkins plugins and related configurations that may be installed in your environment, and only Jenkins plugins shipped with CloudOps for Kubernetes will remain.

The following procedure modifies the Jenkins startup steps, which are stored in a Kubernetes ConfigMap object named jenkins in the default namespace. It adds a step that removes the additional plugins during Jenkins startup.

Authenticate to the Kubernetes cluster so you can run the kubectl command. For more information, see Log On To the Kubernetes Cluster.

Make a backup of the ConfigMap.

kubectl get configmap jenkins -o yaml > jenkins.configmap.yaml

Open the ConfigMap in the kubectl editor. On Linux the default editor is vi and on Windows it is notepad.

kubectl edit configmap jenkins

Find the following lines. They should be on or around line 13.

echo $JENKINS_VERSION > /var/jenkins_home/jenkins.install.InstallUtil.lastExecVersion
echo "download plugins"

Insert the line rm -rf /var/jenkins_home/plugins/* between the above two lines, so that it reads as shown below. If the line rm -rf /var/jenkins_home/plugins/* is already present, you do not need to add it again.

echo $JENKINS_VERSION > /var/jenkins_home/jenkins.install.InstallUtil.lastExecVersion
rm -rf /var/jenkins_home/plugins/*
echo "download plugins"

Save the changes and exit the editor.

Force Jenkins to restart.

kubectl delete pod jenkins-0

Confirm Jenkins starts successfully.

If you plan to install additional plugins, restore the ConfigMap to its previous state so that those plugins are not removed when Jenkins next restarts. Edit the ConfigMap and remove the line rm -rf /var/jenkins_home/plugins/*. Save and exit.
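The manual edit in this procedure can be previewed with a small filter. The sketch below is an assumption-laden illustration, not a supported tool: the function name insert_plugin_cleanup is hypothetical, and it only works if the startup script is stored in the ConfigMap as separate lines rather than as a single escaped string. It inserts the cleanup line after the lastExecVersion line, keeps that line's indentation, and does nothing if the cleanup line is already present.

```shell
# Read text on stdin and print it with the plugin cleanup line inserted
# after the lastExecVersion line, unless the cleanup line already exists.
insert_plugin_cleanup() {
  awk '
    { lines[NR] = $0 }
    /rm -rf \/var\/jenkins_home\/plugins\/\*/ { present = 1 }
    END {
      for (i = 1; i <= NR; i++) {
        print lines[i]
        if (!present && lines[i] ~ /jenkins\.install\.InstallUtil\.lastExecVersion/) {
          # Reuse the leading whitespace of the matched line.
          indent = lines[i]
          sub(/[^ \t].*$/, "", indent)
          print indent "rm -rf /var/jenkins_home/plugins/*"
        }
      }
    }
  '
}
```

To preview the change against the backup taken earlier, one option is: kubectl get configmap jenkins -o yaml | insert_plugin_cleanup | diff jenkins.configmap.yaml -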

Jenkins Configurations are Lost When Jenkins Restarts

If changes made in the Jenkins web interface are not retained when the Jenkins pod restarts, follow the procedure below to prevent this by setting TF_VAR_jenkins_overwrite_config to false.

- Identify a maintenance window during which no Jenkins jobs will be running to apply the change. The Jenkins server pod will be restarted when the change is applied, causing any running jobs to fail.

- Obtain the most recent copy of the docker-compose.override.yml file for your CloudOps for Kubernetes cluster.

- Set the TF_VAR_jenkins_overwrite_config variable to false. If the variable does not exist in your copy of docker-compose.override.yml, you will need to add it.

- (Optional) Set variable TF_VAR_rebuild_nodegroups to false to avoid unnecessary pod redeployment.

- Follow the procedure described in Updating Cluster Configuration to apply the change.
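Before applying the change, it can help to confirm that the override file really sets the variable. The check below is a sketch under an assumption about the file layout: it expects the variable to appear either as KEY=false or as KEY: false, optionally quoted, at the end of a line. The function name jenkins_overwrite_disabled is hypothetical.

```shell
# Succeeds if the given file sets TF_VAR_jenkins_overwrite_config to false.
# Argument: path to a docker-compose.override.yml file.
jenkins_overwrite_disabled() {
  grep -Eq "TF_VAR_jenkins_overwrite_config[=:][[:space:]]*[\"']?false[\"']?[[:space:]]*\$" "$1"
}
```

For example, jenkins_overwrite_disabled docker-compose.override.yml returns a non-zero exit status when the variable is missing or set to another value.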

note

Some CloudOps for Kubernetes version upgrades may require resetting the Jenkins configuration. Ensure you are following the upgrade documentation specific to your CloudOps for Kubernetes version.