Search Server Clustering

Search Server Clustering

The EP Search Server provides product discovery (searching and browsing) for Cortex and catalog management for CM Server. This section provides advice for how to scale a Search Server deployment in a production environment. For staging environments, it is usually sufficient to have a single search master and no slaves.

Topology

Search Server is implemented using a master-slave topology. Only a single master can run at a time, otherwise database conflicts occur updating the TINDEXBUILDSTATUS table.

Two common deployment topologies:

- Small Deployment Topology - The simplest topology suitable for small deployments is to deploy a Search slave on every Cortex node. Then access Search Server via a http://localhost... URL.

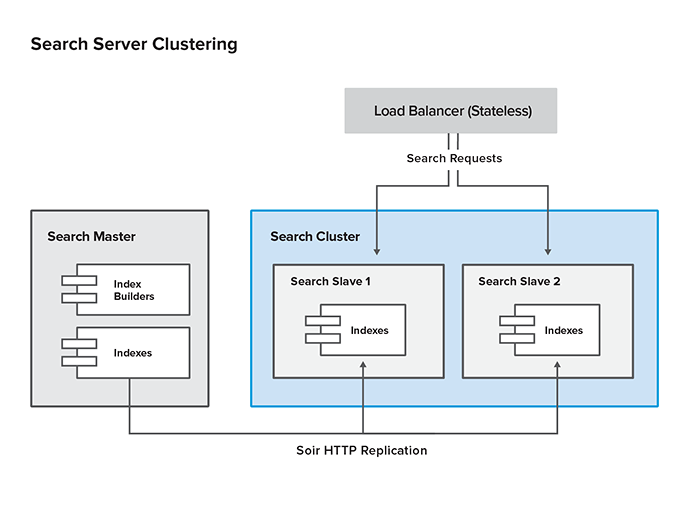

- Large Deployment Topology - Large deployments typically have a separate cluster of Search slaves behind a stateless load balancer. The cluster is accessed via the load balancer URL. As a starting point, EP recommends deploying one Search slave for every three Cortex servers to support catalog searching and browsing. This ratio may vary depending on the searching and browsing patterns for the store and customizations that change search server usage. A large deployment example is shown below:

Configuration

The Search master and slave are deployed using the same WAR file. By default, the WAR is configured to run as a master node with replication disabled. The steps below configure both master and slave.

1. Externalize solrHome directory (master & slave)

Move the solrHome directory from the Search WAR to an external location. We recommend doing this by including the solrHome in the deployment package as a separate artifact and then deploying the artifact to the desired location on the server. This has two benefits:

- Replication configuration can be added without invading the WAR

- Search indexes are not deleted when a new WAR is deployed

Once solrHome is deployed to the desired location, add the JVM parameter -Dsolr.solr.home=<solrHomePath> to the appserver startup script.

2. Configure master replication

Each config file in solrHome/conf has an <xi:include> tag that points to a corresponding replication configuration file.

<!-- include master/slave replication settings -->

<xi:include href="file:/etc/ep/product.solr.replication.config.xml" xmlns:xi="http://www.w3.org/2001/XInclude">

<xi:fallback>

<!-- Currently this reference is relative to the CWD. As of SOLR 3.1 it will be relative to the current XML file's directory -->

<xi:include href="../replication/product.solr.replication.config.xml" xmlns:xi="http://www.w3.org/2001/XInclude">

<!-- Silent fallback if no external configuration found -->

<xi:fallback />

</xi:include>

</xi:fallback>

</xi:include> In this case, Solr first looks for product.solr.replication.config.xml in /etc/ep and then in the ../replication relative path.The relative path is relative to where the Search server shell script is run.

If necessary, you can change the <xi:include> block in the Solr config files if /etc/ep is not an acceptable location.

Here is an example of the product.solr.replication.config.xml file.

<requestHandler name="/commerce-legacy/replication" class="solr.ReplicationHandler"> <lst name="master"> <!--Replicate on 'startup' and 'commit'. 'optimize' is also a valid value for replicateAfter. --> <str name="replicateAfter">startup</str> <str name="replicateAfter">commit</str> <!-- <str name="replicateAfter">optimize</str> --> <!--Create a backup after 'optimize'. Other values can be 'commit', 'startup'. It is possible to have multiple entries of this config string. Note that this is just for backup, replication does not require this. --> <!-- <str name="backupAfter">optimize</str> --> <!--If configuration files need to be replicated give the names here, separated by comma --> <str name="confFiles">schema.xml,stopwords.txt,elevate.xml</str> <!--The default value of reservation is 10 secs.See the documentation below . Normally , you should not need to specify this --> <str name="commitReserveDuration">00:00:10</str> </lst> </requestHandler>

Create separate config files for each index you want replicated. You don't have to replicate all indexes--just the ones that are actually needed. Here is the full list of config files:

| Index | Replication config file | Index used by |

|---|---|---|

| Category | category.solr.replication.config.xml | Cortex, CM Client |

| CM User | cmuser.solr.replication.config.xml | CM Client |

| Customer | customer.solr.replication.config.xml | CM Client |

| Product | product.solr.replication.config.xml | Cortex, CM Client |

| Promotion | promotion.solr.replication.config.xml | CM Client |

| Shipping Service Levels | shippingservicelevel.solr.replication.config.xml | CM Client |

| SKU | sku.solr.replication.config.xml | CM Client |

3. Configure slave replication

For each replication configuration file on the master, create a corresponding configuration file on the slave. Here is an example of the product.solr.replication.config.xml file:

<requestHandler name="/commerce-legacy/replication" > <lst name="slave"> <str name="enable">true</str> <!--fully qualified url for the replication handler of master . It is possible to pass on this as a request param for the fetchindex command--> <str name="masterUrl">http://venus.elasticpath.net:8080/searchserver/product/replication</str> <!--Interval in which the slave should poll master .Format is HH:mm:ss . If this is absent slave does not poll automatically. But a fetchindex can be triggered from the admin or the http API --> <str name="pollInterval">00:00:20</str> <!-- THE FOLLOWING PARAMETERS ARE USUALLY NOT REQUIRED--> <!--to use compression while transferring the index files. The possible values are internal|external if the value is 'external' make sure that your master Solr has the settings to honour the accept-encoding header. see here for details http://wiki.apache.org/solr/SolrHttpCompression If it is 'internal' everything will be taken care of automatically. USE THIS ONLY IF YOUR BANDWIDTH IS LOW . THIS CAN ACTUALLY SLOWDOWN REPLICATION IN A LAN--> <str name="compression">internal</str> <!--The following values are used when the slave connects to the master to download the index files. Default values implicitly set as 5000ms and 10000ms respectively. The user DOES NOT need to specify these unless the bandwidth is extremely low or if there is an extremely high latency--> <str name="httpConnTimeout">5000</str> <str name="httpReadTimeout">10000</str> <!-- If HTTP Basic authentication is enabled on the master, then the slave can be configured with the following <str name="httpBasicAuthUser">username</str> <str name="httpBasicAuthPassword">password</str> --> </lst> </requestHandler>

4. Disable indexing on slaves

Add the following line to the ep.properties file on each slave server to stop the slaves from indexing themselves.

ep.search.triggers=disabled

5. Enable the Search Master

Add the following line to ep.properties on the server containing the search master to identify which server contains the Search master.

ep.search.requires.master=true

6. Configure searchHost setting

Configure the searchHost server's URL through the system configuration setting: COMMERCE/SYSTEM/SEARCH/searchHost What URL you set depends on your deployment topology.

Simple topology

In a simple deployment topology, you only need to configure the default value to point to http://localhost:<port>/<contextPath>/.

Slave cluster topology

If the search slaves are clustered behind a load balancer, then you need two setting values. The default value identifies the slave cluster and an additional value identifies the search master.

| Context Path | Value |

|---|---|

| URL of the load balancer for the slave cluster | |

| master | URL of the search master |

Operational Instructions

See the following sections for operational instructions: