Common Issues

Services start failing to resolve

If the CloudOps for Kubernetes Jenkins and Nexus DNS hostnames stop resolving, this might be because the kube-proxy DaemonSet is unhealthy.

To check the status of the DaemonSet with kubectl, run the following command:

kubectl --namespace kube-system describe daemonsets kube-proxy

You can also check the status of the kube-proxy DaemonSet in the kube-system namespace through the Kubernetes Dashboard.

- To access the Kubernetes Dashboard, see Accessing Kubernetes Dashboard.
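As a quicker alternative to reading the full describe output, the following is a minimal sketch that compares the number of kube-proxy pods the DaemonSet wants with the number that are ready. The function names `daemonset_state` and `check_kube_proxy` are hypothetical helpers introduced for this example. `desiredNumberScheduled` and `numberReady` are fields of the DaemonSet status. Treating a DaemonSet with zero desired pods as unhealthy is an assumption of this sketch.

```shell
# Prints "healthy" when at least one pod is desired and every desired pod is ready,
# otherwise prints "unhealthy". Empty or non-numeric input counts as unhealthy.
daemonset_state() {
  desired="$1"
  ready="$2"
  if [ "${desired:-0}" -gt 0 ] 2>/dev/null && [ "$desired" -eq "${ready:-0}" ] 2>/dev/null; then
    echo "healthy"
  else
    echo "unhealthy"
  fi
}

# Reads both counters from the cluster and prints a one-line summary.
check_kube_proxy() {
  desired="$(kubectl --namespace kube-system get daemonset kube-proxy \
    -o jsonpath='{.status.desiredNumberScheduled}')"
  ready="$(kubectl --namespace kube-system get daemonset kube-proxy \
    -o jsonpath='{.status.numberReady}')"
  echo "kube-proxy: ${ready:-0}/${desired:-0} pods ready, $(daemonset_state "$desired" "$ready")"
}
```

After defining both functions in a shell that has access to the cluster, run `check_kube_proxy`. An "unhealthy" result suggests looking at the describe output or the Kubernetes Dashboard for details.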

DaemonSets in the Kubernetes cluster are failing

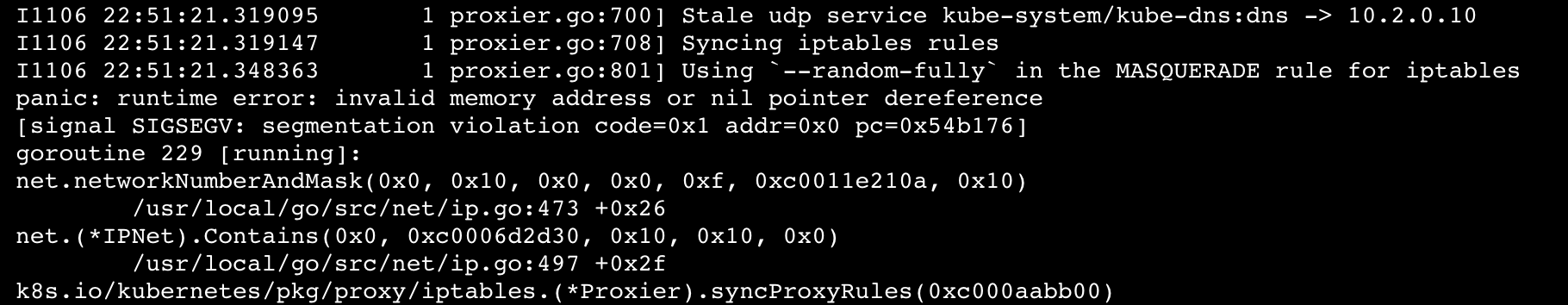

If DaemonSets are failing the Kubernetes cluster and the logs in the pods have the error:

Ensure that you check the resource reservation in the Kubelet configuration. The failures might be because the kubelet has run out of memory and started stealing from the DaemonSets.

To see the kubelet configuration of a node:

Choose a node to inspect. In this example, the name of this node is referred to as NODE_NAME.

In one tab of the command terminal, start the kubectl proxy:

kubectl proxy --port=8001

In a second tab, run the following command to get the configuration from the configuration endpoint:

Note: The resources reserved for the kubelet are in the kubeReserved JSON object.

NODE_NAME="the-name-of-the-node-you-are-inspecting"; curl -sSL "http://localhost:8001/api/v1/nodes/${NODE_NAME}/proxy/configz" | jq '.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"'
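To look only at the reservation rather than the whole configuration, the configz response can be filtered down to the kubeReserved object. This is a minimal sketch that assumes the kubectl proxy is still running on port 8001 and that jq is installed. The function names `print_kube_reserved` and `fetch_kube_reserved` are hypothetical helpers introduced for this example.

```shell
# Prints the kubeReserved object from a configz response read on stdin.
# Prints null when the kubelet configuration has no kubeReserved entry.
print_kube_reserved() {
  jq -c '.kubeletconfig.kubeReserved'
}

# Fetches the configz response for the node given as the first argument
# through the local kubectl proxy and prints its kubeReserved object.
fetch_kube_reserved() {
  curl -sSL "http://localhost:8001/api/v1/nodes/$1/proxy/configz" | print_kube_reserved
}
```

For example, `fetch_kube_reserved "${NODE_NAME}"` prints the reservation for the node chosen earlier. A `null` result would indicate that no resources are reserved for the kubelet on that node.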

To compare the resources used by the DaemonSets on the node with the resources reserved in the kubelet configuration, run the following command against the same node used in the previous steps:

kubectl describe node ${NODE_NAME}
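The describe output is long, and the part most relevant to this comparison is the summary of allocated resources. The following is a rough sketch that prints only that section. It assumes the output contains an "Allocated resources:" heading followed later by an "Events:" heading, which is the usual layout but is human-readable output rather than a stable interface. The function name `allocated_resources` is a hypothetical helper.

```shell
# Prints the "Allocated resources" section of `kubectl describe node` output
# read on stdin, stopping before the "Events" section.
allocated_resources() {
  awk '/^Allocated resources:/ {show = 1} /^Events:/ {show = 0} show'
}
```

Usage: `kubectl describe node "${NODE_NAME}" | allocated_resources`. If the headings differ in your kubectl version, the function prints nothing and the full output should be read instead.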

Running out of ephemeral storage

Pods can run out of ephemeral storage when building large Self Managed Commerce deployment packages, depending on the size of the source code.

When this happens in the build-deployment-package Jenkins job, the job logs typically contain a message like the following:

default/jenkins-worker-51db7930-910b-4888-b88e-eeb68b987803-9wjfl-4k9ms Pod just failed (Reason: Evicted, Message: Pod ephemeral local storage usage exceeds the total limit of containers 13322Mi. )

When you experience this error, review the podYaml definition in the Jenkinsfile for the Jenkins job that is failing:

def podYamlFromFile = new File("${env.JENKINS_HOME}/workspace/${env.JOB_NAME}@script/cloudops-for-kubernetes/jenkins/agents/kubernetes/maven-5gb-2core-1container.yaml").text.trim();

String podYaml = podYamlFromFile.replace('${dockerRegistryAddress}', "${dockerRegistryAddress}").replace('${jenkinsAgentImageTag}', "${jenkinsAgentImageTag}")

For this error, edit the maven-5gb-2core-1container.yaml file.

Open the YAML file in any text editor and change the existing ephemeral storage resource definitions:

resources:
  requests:
    memory: "5632Mi"
    cpu: "2"
    ephemeral-storage: "13Gi"
  limits:
    memory: "5632Mi"
    cpu: "2"
    ephemeral-storage: "13Gi"

Add as much ephemeral storage as you require. For example, to set the requests and limits to 20Gi:

resources:
  requests:
    memory: "5632Mi"
    cpu: "2"
    ephemeral-storage: "20Gi"
  limits:
    memory: "5632Mi"
    cpu: "2"
    ephemeral-storage: "20Gi"
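Before committing, it can help to confirm that the edit changed both the request and the limit. The following is a minimal sketch that lists every ephemeral-storage value in the file and checks that they are all the same, as in the example above. The function names `ephemeral_storage_values` and `ephemeral_storage_consistent` are hypothetical helpers, and the check assumes the file should carry a single value throughout.

```shell
# Prints every ephemeral-storage value found in a pod YAML file, one per line,
# with surrounding quotes removed.
ephemeral_storage_values() {
  sed -n 's/^[[:space:]]*ephemeral-storage:[[:space:]]*"\{0,1\}\([^"[:space:]]*\)"\{0,1\}.*$/\1/p' "$1"
}

# Succeeds when the file contains exactly one distinct ephemeral-storage value,
# that is, when requests and limits agree.
ephemeral_storage_consistent() {
  distinct="$(ephemeral_storage_values "$1" | sort -u | wc -l | tr -d ' ')"
  [ "$distinct" -eq 1 ]
}
```

For example, `ephemeral_storage_values maven-5gb-2core-1container.yaml` should print `20Gi` twice after the edit shown above, and `ephemeral_storage_consistent maven-5gb-2core-1container.yaml` should succeed.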

Use Git to commit and push your changes to your CloudOps for Kubernetes repository. The failing Jenkins job can then use the changes that you made in your repository. Repeat this process until you find the right limits for the failing job.